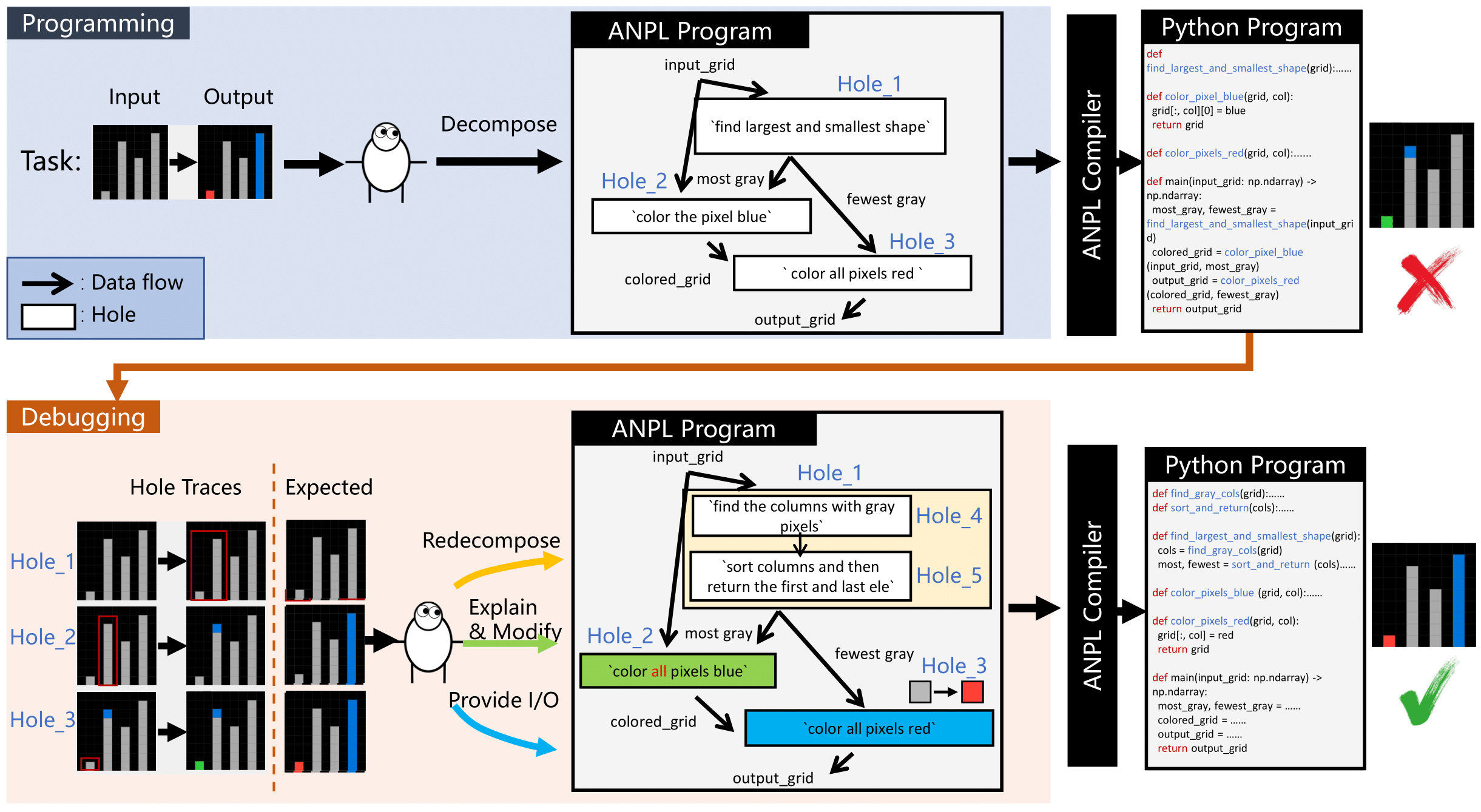

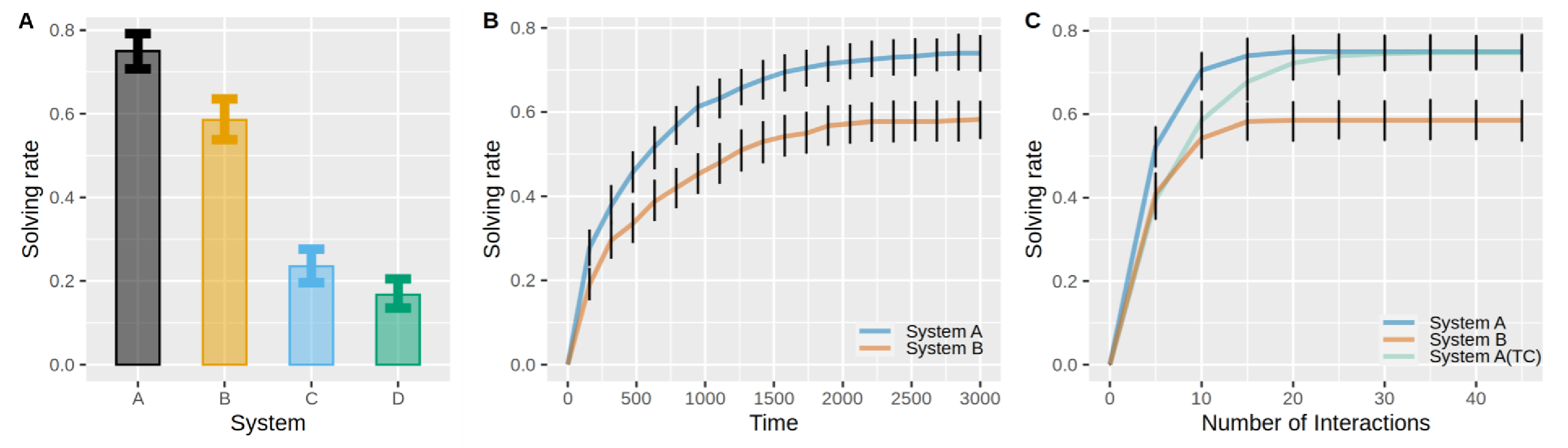

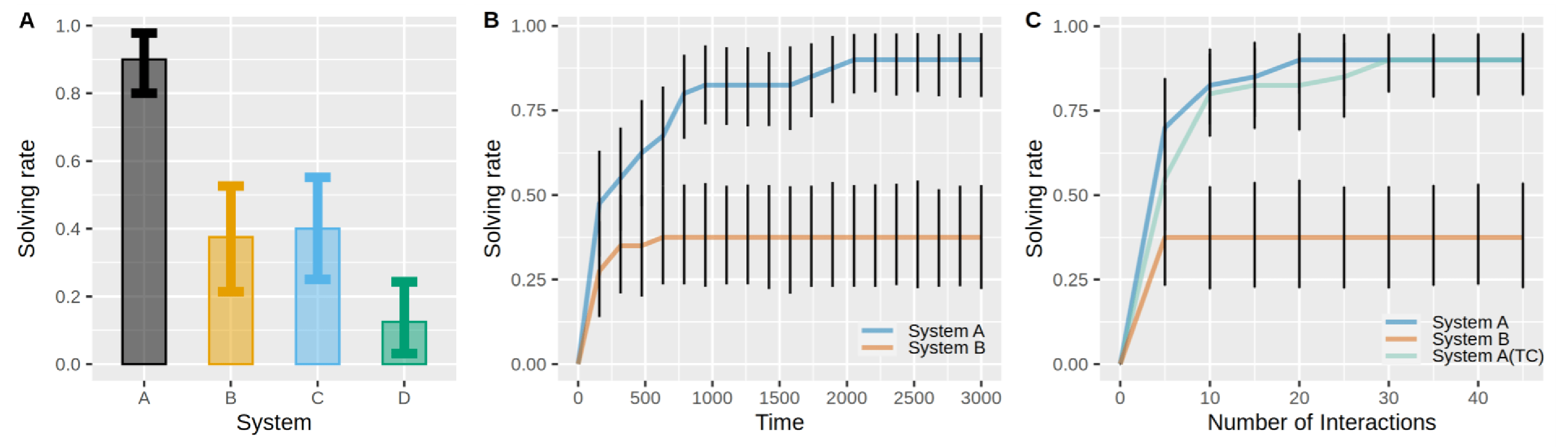

User Study

We conducted a user study on 400 ARC training tasks to evaluate the effectiveness of ANPL compared to the original ChatGPT (GPT-3.5-turbo). Specifically, we compare four systems: system A (ANPL), system B (GPT + interaction, i.e. ChatGPT), system C (ANPL without interaction), and system D (vanilla GPT). Systems C and D report the solving rate of one-shot generation, without further interaction, using systems A and B respectively.

Results of problem-solving rates. A: Solving rates of the four systems. B: The relationship between solving rate and time consumption. C: The relationship between solving rate and number of interactions. Trace Calculated (TC) means that uses of the trace mode are counted as interactions.

Results of problem-solving rates when users cannot see the generated Python code and interact only through the user interface and I/O feedback. A: Solving rates of the four systems. B: The relationship between solving rate and time consumption. C: The relationship between solving rate and number of interactions. Trace Calculated (TC) means that uses of the trace mode are counted as interactions.

In conclusion:

- With high confidence, ANPL performs best and allows users to solve more problems: its average solving rate reaches 75.0%, while system B achieves 58.4% (↑ 28.25%).

- Programming with interaction (systems A and B) is always better than programming without interaction (systems C and D).

- Even for one-shot code generation, ANPL without interaction (system C) performs better than vanilla GPT (system D).
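
The improvement figure in the first conclusion can be sanity-checked with a short calculation. The sketch below assumes the arrow denotes the relative improvement of system A over system B; the function name `relative_improvement` is hypothetical and not part of ANPL. With the rounded rates quoted above it gives roughly 28.4%, so the reported 28.25% was presumably computed from unrounded rates (an assumption, not something stated in the text).

```python
def relative_improvement(new_rate: float, base_rate: float) -> float:
    """Return the relative improvement of new_rate over base_rate, in percent."""
    if base_rate <= 0:
        raise ValueError("base_rate must be positive")
    return (new_rate - base_rate) / base_rate * 100.0


# Rounded average solving rates quoted in the text (percent).
anpl_rate = 75.0  # system A (ANPL)
gpt_rate = 58.4   # system B (GPT + interaction)

# Assumption: the reported gain is relative, not an absolute difference.
gain = relative_improvement(anpl_rate, gpt_rate)
print(f"Relative improvement of A over B: {gain:.2f}%")
```

The absolute gap between the two rates is 16.6 percentage points, which is why the relative reading is the more plausible interpretation of the arrow.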